Crossing the Rubicon: Welcome to the Unapologetic Era of "User Generated Information"

How should we think about regulating attention in the name of information rather than purely regulating communication tools?

Back in the early 1900s, the United States government, the Bell group, and a relatively new company called AT&T agreed that access to communication via devices like the telephone and the radio was a public utility. These devices and services connected tens of millions of people across the country. Presidents like Franklin D. Roosevelt would go on to deliver fireside chats to Americans during World War II, and phones were used to update farmers about weather conditions in the days ahead. Communication was the means of spread, but access to fast information was what the government sought to protect. Information, then, like transportation, energy, and banking, ought to experience heavier forms of regulation because of its necessity.

Then came the internet and everything kind of blew up forever. Centralized distribution of information became decentralized. Paywall restrictions were torn down in the name of free content for all. Publishing didn’t require enormous upfront investment, and not having one sole entry point allowed a competitive industry to sprout. Audience development came to depend on the virality that accompanied posts spreading from person to person, not just circulation as a means of controlling attention. All of these changes made it so that the flow of information, and the business of those who want to monetize it, became less about vetting for importance and more about vetting for attention. The internet was hardly new to this. Hell, you could argue that Martin Luther developed this concept in the years after posting his 95 theses to the door of Castle Church in 1517, using access to a printing press and monopolization of printers around Wittenberg to capture people’s attention regardless of whether the information was useful (theological concepts) or designed to go viral (rebuttals to his critics). The approach was echoed by pamphlet writers in the 1700s and 1800s and by the cable TV networks of the ’80s and ’90s, and it found the right technology with the internet.

Our information spaces are constantly changing, and their rules are constantly rewritten. Never, however, have they been so pervasive, so invasive, and their overseers so evasive.

Taken from a macro perspective, which is the only way to explore this topic without writing a series of books that would become outdated the second they publish, there were four major cultural, technological, and infrastructural events that created our current information environment.

Lower costs eliminating barriers to entry for accessing and distributing information

Democratization of supply (posting) and demand (blogs, social media, email) of information and entertainment

Decline in traditional news owners as information moved from an authoritative commodity to shared experience and amateur expertise (Popular Science into subreddits)

Mobile computing that catered to a 24/7 cycle of user generated information

History has a funny way of repeating itself though. For all that everything was blown up with the arrival of the internet, so much of what’s happening is reminiscent of the earliest telecommunication movements of the late 1800s and early 1900s. The biggest difference, however, is that communication companies weren’t information companies. They carried access to those information companies. AT&T didn’t own news until it purchased CNN via its acquisition of WarnerMedia (now Warner Bros. Discovery). AT&T was labeled a public good, a utility. It was subjected to more regulation, and new bodies like the Federal Communications Commission (FCC) were instituted via the Communications Act of 1934 to ensure that AT&T would remain readily available, accessible, and affordable to a growing country reliant on new telephone technologies.

It’s here that I’d argue governments have tried to act fast on regulating new communication technologies as it pertains to the health of the country, but have been too slow to understand the consequences of information for those same people they’ve sworn to represent and protect.

The Bigness Problem

Information is a whole other battle. It’s why companies like Meta as well as publications including the New York Times hire very fancy lawyers. Since they are private companies, and don’t receive funding via the Corporation for Public Broadcasting, they receive far less oversight. Both platforms like Facebook and publications like the New York Times also have the protection of Section 230 for comments made on their platforms, meaning they mostly can’t be sued for content that appears if they are showing a strong attempt to remove harmful words and images.

Mostly that’s where the similarities end. The New York Times can be sued for libel for content that appears in its articles as a publisher. Since Facebook isn’t a publisher, it can’t be sued for the same circumstance. (Disclaimer: I am not an expert lawyer on Section 230, so free speech lawyers — check me on that!) Many Section 230 defenders, including myself, argue this is good. Groups like the Electronic Frontier Foundation refer to Section 230 as “the most important law protecting internet speech.” For all the power it gives conglomerates like Meta and Google in protection from an endless parade of lawsuits, it also protects blogs, newspapers, and newsletters (like this one) from the same concerns.

Perhaps the most important difference between a conglomerate like Meta and a publisher like the New York Times, both of which deal in the business of capturing attention and transmitting information directly to audiences, is one three-letter word: big.

Over the last week, following Meta’s strongly criticized content moderation changes, I’ve spent almost every minute of my day thinking about the problem with bigness. People typically pay attention to a new technology, a new format, a new group, or a new behavior when it becomes too big to ignore. Understandably. Countries pay attention to growing militaries. Companies pay attention to growing technologies. Governments pay attention to growing corporations. Growth comes from subsuming. Devouring competitors, conquering neighbors, and controlling information. Inevitably, at some point, cracks begin to appear in the very foundation once the entity in question becomes too big. Non-stop growth always leads to self-immolation.

Incentives for bigness also lead back to methods of controlling and perpetuating that enormity. As many journalists have pointed out, Meta C.E.O. Mark Zuckerberg flip-flops on the policies that platforms like Facebook and Instagram should adhere to depending on what the administration in power wants to hear, not necessarily what he thinks Meta should do. Some have argued this is because Zuckerberg doesn’t know what Facebook is; I agree, but I’d add that Zuckerberg doesn’t care what Facebook is so long as it’s the biggest. In the context of information ownership, not just for a country but for the world’s population, this can theoretically lead beyond a misalignment between what’s beneficial for the greater good and what’s popular. Worse, it can take on a new role of information suppression under the guise of free speech.

One of the great theories about Rome’s republic falling, beyond the advent of political propaganda, political violence, and power-hungry, egotistical demagogues, is that it got too large to govern. Chaos follows scale. Combining self-interests governed by immense wealth and power, influence over unprecedentedly large bodies of people, and destruction of trust in institutions ultimately leads to two things: unrest and faithlessness. The only grand weapon the public can arm itself with is information, but what happens when it’s an information carrier that’s gotten too big?

Trying to govern the internet, as I wrote in last week’s Halftime, is an impossible task. It’s become too big to do much of anything but try and contain the fire before the blaze wipes out everything in its path. Increasingly, arguably one of the only questions left, and one that the Federal Trade Commission (FTC) is currently suing over, is what happens when the very concept of the internet, a decentralized information portal, starts to look more concentrated under one company. If Meta’s platforms exist as the internet for many, how big is too big?

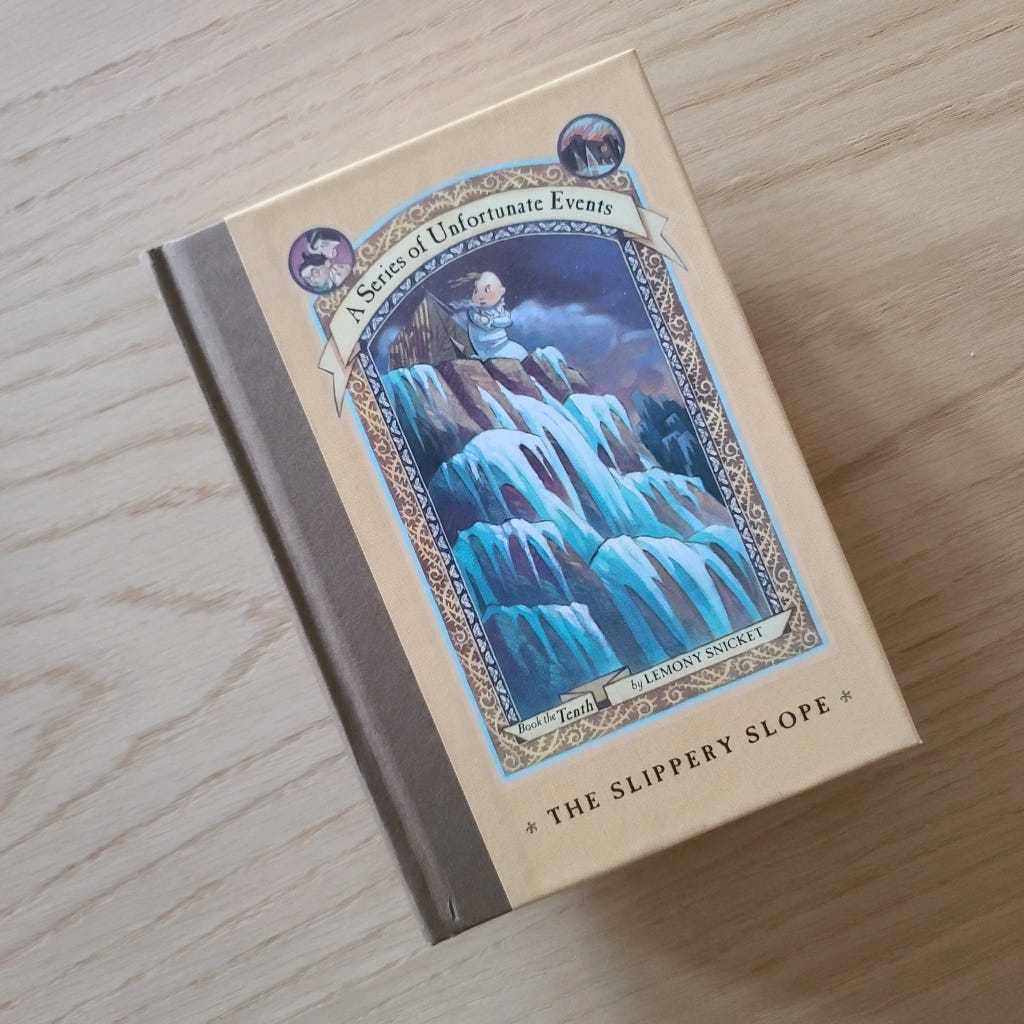

Climbing Up a Slippery Slope

A slippery slope, as I said last week, is the phrase that you’ll hear over the next several years, from both sides, and it’s certainly the one bouncing around my head. So much of the response I’ve seen to Zuckerberg’s announcement perpetuates the idea that Zuckerberg is enacting these new rules to please President Trump, a kind of submission that a near autocratic-minded politician would find enormously endearing. Trump even blatantly told reporters that Zuckerberg probably introduced the new policy because of him. It’s not difficult to see why. Just look at some of the moves that Zuckerberg and company have made in the lead-up to Trump’s inauguration:

Notable conservative Joel Kaplan is stepping into a global policy leader position at the largest social media company in the world ahead of Trump’s inauguration

Meta’s move to publicly scale back moderation by eliminating fact checking comes after Brendan Carr, Trump’s nominee for Federal Communications Commission chair, asked Meta to do so

Meta C.E.O. Mark Zuckerberg also specifically cites the changing culture as a reason to not clamp down on posts

Donated $1 million to Trump’s “inauguration fund”

UFC C.E.O. and Trump compatriot Dana White was appointed to Meta’s board

The move led to the removal of negative and critical internal comments about White’s appointment

Yes, that’s despite Meta no longer fact checking public posts

Eliminating all diversity, equity, and inclusion (DEI) efforts

Continued its lobbying efforts to prevent TikTok from operating in the United States

I don’t doubt there is certainly an element of a man bowing to an incoming king with the presentation of a sacrificial lamb in order to ensure that the watchful eye never hovers over his own house. Again, it’s not difficult to see why. Here are just a couple of major issues facing Meta in the months ahead, laid out by my friend Taylor Lorenz at User Mag, that having Trump on the side of Team Zuckerberg would certainly help with:

Meta is facing an antitrust trial in April, and the company has lots of other business on the radar of the U.S. government.

Trump has threatened to send Zuckerberg to prison for “the rest of his life”

Brendan Carr, Trump’s new FCC chairman, aims to regulate tech companies like Meta by curbing Section 230 protections and increasing oversight

…and this doesn’t even touch upon Meta’s race to become the dominant force in VR, to become a major AI player with its Llama large language model, and to continue meeting shareholder expectations (not value) by increasing the number of people using Meta products (Facebook, Instagram, WhatsApp, Threads, Oculus) from 3.4 billion people, or 41% of the world’s population, to even more

All of these problems that Meta is facing, however, weren’t thrust upon the company because of a particularly left or right leaning government. Meta wasn’t just picked at random to be made an example of, despite what the company’s lawyers may argue. Meta focused on bigness. Executives defined success by the subsuming of competitors, by the incremental increase in seconds someone spent with their platforms and not others, by the ability to control the attention of a global audience and the information they see. Most of this isn’t new. Antitrust laws emerged from the need to prevent imperial takeovers that harm the greater good by limiting societal growth in the name of corporate growth.

Here’s where it gets complicated. By all definitions, companies like Meta and X have allowed for the largest forum for public free speech in history. Extending that argument, this was allowed to happen in the free market. One part of the slippery slope is the assumed level of government interference in business. Think about the evolution of information in the United States, not communication evolution. AT&T controlled a portion of NBC during the heyday of radio and early television, and used its content to transmit exclusively on its wires. Its position as a monopoly all but prevented real competition via a rapidly adopted new communication technology.

Put another way, the near vertical integration of NBC (information) into AT&T (communication) drastically limited the type of information radio listeners and TV viewers could receive. Concentrated centralized media environments led to the rise of cable, or a need for a more decentralized pro-consumer ideology. President Nixon helped to champion the new television landscape, believing that more TV stations stealing more people’s attention would lead to less overall coverage and attention to issues like the Vietnam War and Watergate. Nixon didn’t predict CNN. Nixon also didn’t predict that companies like AT&T or Comcast would still act as the arbiters through “editorial” judgments about what channels to carry. Still, cable was an important development. It helped spark the radical nature of the decentralized internet from its early days.

The internet is arguably as decentralized and open as it is today because of the immense concerns and laws following the undeniable monopolization via centralization of communication and information in the prior century.

The slippery slope I see is the tectonic plate that Meta sits on as the most powerful media company (information) in the world and one of the largest communication connectors. What is Facebook? What is Instagram? What is Threads? We call them social media, but they are information portals. This move reaffirms Zuckerberg and global policy chief Joel Kaplan’s philosophies, and certainly many others’, that access to all information is better for the common good than restriction to some information.

Time and time again, Zuckerberg has proved he doesn’t really know how to handle the magnitude of what he’s created. His entire project started as a game to rank college women in Boston. The reach that he had in mind with Facebook’s original concept was the largest world he could imagine at the time: the Ivy League. Manageable in nature, exclusive by definition but, and this is important to Zuckerberg’s story, conquerable. Now he’s trying to determine how to make more than three billion people happy, how to keep them hooked on his platform, and how to not yield any monopolistic power he has in the information space.

If you were still in doubt about Zuckerberg’s plans, just look at his wardrobe. The shirt below reads “aut Zuck aut nihil,” which is Latin for “all Zuck or nothing.” Funny, sure. Inspired by Roman caesars? Absolutely.

Attempts to remain as big as possible have led to a few different strategic positions. Frequently in the past that has looked like Zuckerberg trying to work with the government. More active regulation would certainly help Meta in some respects. For example, it would be harder for regulators to try and break the company up as it is effectively institutionalized, sanctioned by the government itself. In 2019, Zuckerberg argued in a Washington Post op-ed that he believed the internet needed a more “active role for governments and regulators,” he wrote. “By updating the rules for the Internet, we can preserve what’s best about it — the freedom for people to express themselves and for entrepreneurs to build new things — while also protecting society from broader harms.”

But what this week’s announcement demonstrated is that neither Zuckerberg nor people inside Facebook’s policy team can agree on what those broader harms are and to whom in society they are harmful. Nor is there much concrete evidence that the government has any idea what regulating a communications company that is also the largest information supplier in the world looks like, nor should the government have anything close to total power over it.

Perhaps what makes all of this so endlessly frustrating is that every person involved in the conversation, involved in the fight for the information sphere of tomorrow, has a leg to stand on. I like how Jameel Jaffer, executive director at the Knight First Amendment Institute, explained it to The Verge’s Nilay Patel in a 2021 Decoder episode. There are effectively three parties sitting at the table. The social media companies who argue that they are “building an expressive community” and therefore they “should get to decide what our expressive community looks like.” There are people using the platforms who argue that these spaces are new public squares and they should be able to post without “interference on the basis of, for example, a viewpoint.” And then there’s the government that argues it needs to “protect the integrity of the public square” and ensure it “works for our democracy.”

All of which brings us back to the original Meta content moderation question. Does this move benefit the vast majority of people represented in each bucket? Unfortunately, many of us know the correct answer even if it’s the impossible one. Bigness is the problem. If Rome couldn’t prevent its downfall due to the egregious actions of men guided by a sense of ruling a kingdom that couldn’t be contained, what hope does someone like Zuckerberg — or anyone in his position — have of trying to stop flames spreading to the furthest corners of the world at one trillion times the speed? Google runs into the same issue with its search engine and YouTube. It’s not just a Meta problem, it’s a bigness problem. Meta is just the first company to acknowledge it, and say “fuck it” instead of finding ways to lessen the load it carries.

This isn’t a conversation about left or right wing politics as much as we’d prefer the simplicity of that lens. The Right will argue that how the Left feels today about last week’s developments is how they felt when they were being “silenced” through more active moderation eight years ago. We can, however, evaluate what’s harmful to groups of users much like we can argue what is harmful to democracy. Fortunately, we have some recent historical precedent to look at for Meta. Consider that since Elon has taken over X, rolled back content moderation, and introduced Community Notes instead of relying on fact checkers, we’ve seen:

Hate speech “surge” on Twitter in the weeks following Musk’s takeover, according to a joint study released in June 2023 from UCL, USC, University of Oregon, and UC Merced, and as reported by Wired

Bots didn’t decrease in activity, despite that being a number one concern touted by Musk

Violent anti-semitic content only made up 2% of all social media content in 2022, but 90% of that percentage base came from Twitter, according to CyberWell

Publications like NPR and the CBC were labeled as “state media,” which is a tag usually reserved for state-owned propaganda machines out of Russia and China

Ironically, actual state-controlled media accounts began to flourish post-Musk’s takeover and the decrease in content moderation, according to a 2023 study by Atlantic Council’s Digital Forensic Research Lab

Analysis from the Center for Countering Digital Hate “showed that Twitter Blue was being weaponized, particularly being taken up by people who were spreading disinformation,” according to Wired

X’s percentage of daily app users dropped by more than 20% between November 2022 and February 2024, according to SensorTower

Some additional truths we know about social media. A team at the Stanford School of Humanities and Sciences discovered in a 2024 report that negative posts from extreme left and right leaning outlets or influencers were 12% “more arousing” than others, and these were the most likely to go viral on platforms like X. Similar reports from the National Health Service in the United Kingdom found that heightened attention to content that is obviously or perceivably more negative caters toward a negativity bias, which leads to more sharing from accounts not necessarily inherently or perceivably more negative. Another study published in the journal Nature Human Behaviour in 2023 found that each additional negative word in a headline or post increased the click-through rate of an article by 2.3%.

These are just a couple of recent studies, but entire books like Max Fisher’s The Chaos Machine have shown again and again how hands-off purveyors of free speech produce two undeniable and destructive truths. Firstly, people trying to turn attention given into attention received post more negatively to appeal to an algorithm that does surface and suppress content even if it’s not in an editorial manner. Secondly, this destroys trust in institutions, including overall government, education, science, and news media, which are all at historic lows, according to Pew Research.

Since Meta is following in Elon’s footsteps, including literally pulling from X’s playbook regarding Community Notes, let’s take one last look at X, the site that Zuckerberg arguably (as The Atlantic has written) always wanted. Twitter’s original moderation changes didn’t prevent people with more left-leaning viewpoints from continuing to post, but they did create a hostile enough environment for them not to want to be there. Why couldn’t this happen to Threads or Instagram, and especially on sites like Facebook where private groups may spread genuinely harmful thinking that could theoretically result in physical harm, such as referring to queer people as being mentally ill in states like Florida or Texas?

*The disincentives for speech a la participation are just as important as the incentives for speech a la participation.*

The biggest difference between X and Meta is, of course, size. Twitter, back when it was called that, was always much smaller than Facebook and Instagram. Twitter boasted around 400 million users in 2022, before Musk took it over. Facebook boasted 2.96 billion. Multiple reports suggest that X has lost users in the years following Musk’s takeover (a stat he has publicly disputed). Meta’s products now reach more than 3.4 billion people. Zuckerberg’s platforms have more going for them (advertising revenue, number of users, global appeal) than X likely ever will, and yet Zuckerberg isn’t happy. What he doesn’t seem to have is perceived influence, and that comes from information, not the device of communication.

The moves Zuckerberg and team make are designed to tackle a spot that Zuckerberg seems to think he’s not big enough in: control and influence of information. As we saw with X users migrating to platforms like Bluesky (and Threads), a hands-off approach does intrinsically change the flow of information and those who access it. In 2019, Facebook controlled “more than 80 percent of time spent since 2011, at least 70 percent of daily active users, and at least 65 percent of monthly active users,” of all social media activity, according to Comscore as reported by Wired. Look at Meta’s growth over the last few years and consider the impact on those stats, especially if TikTok gets banned.

When the FTC filed its lawsuit against Facebook, the agency noted that one of the biggest red flags was its “ability to harm users by decreasing product quality, without losing significant user engagement, [indicating] that Facebook has market power.” A decrease in quality but increase in attention as it pertains to information, and as information remains the first defense against a crumbling democracy, is my biggest red flag. Those who control the flow of information control the future of countries. Zuckerberg, more than anyone, understands that truth. He always has. Now he just has the unspoken permission to cross the Rubicon.