An Accidental, Positive Side Effect of AI Slop

When all you have are piles of shit information, you create scarcity for good information — and there's always a protective layer around scarcity.

First a brief update…

Last week, I published a blog post voicing my fears about the new incentive structures and opportunities that gimmicky entrepreneurs can take advantage of in our current generative AI web era. It takes very little for someone to take an answer that ChatGPT spits out in response to literally any question, upload that answer to a WordPress CMS, republish it with the right generative engine optimization (GEO) tactics so that ChatGPT refers people back to the site, and profit off the traffic that comes in each day. Even if the traffic is minimal, it doesn’t matter: the opportunity is great because the risk is non-existent.

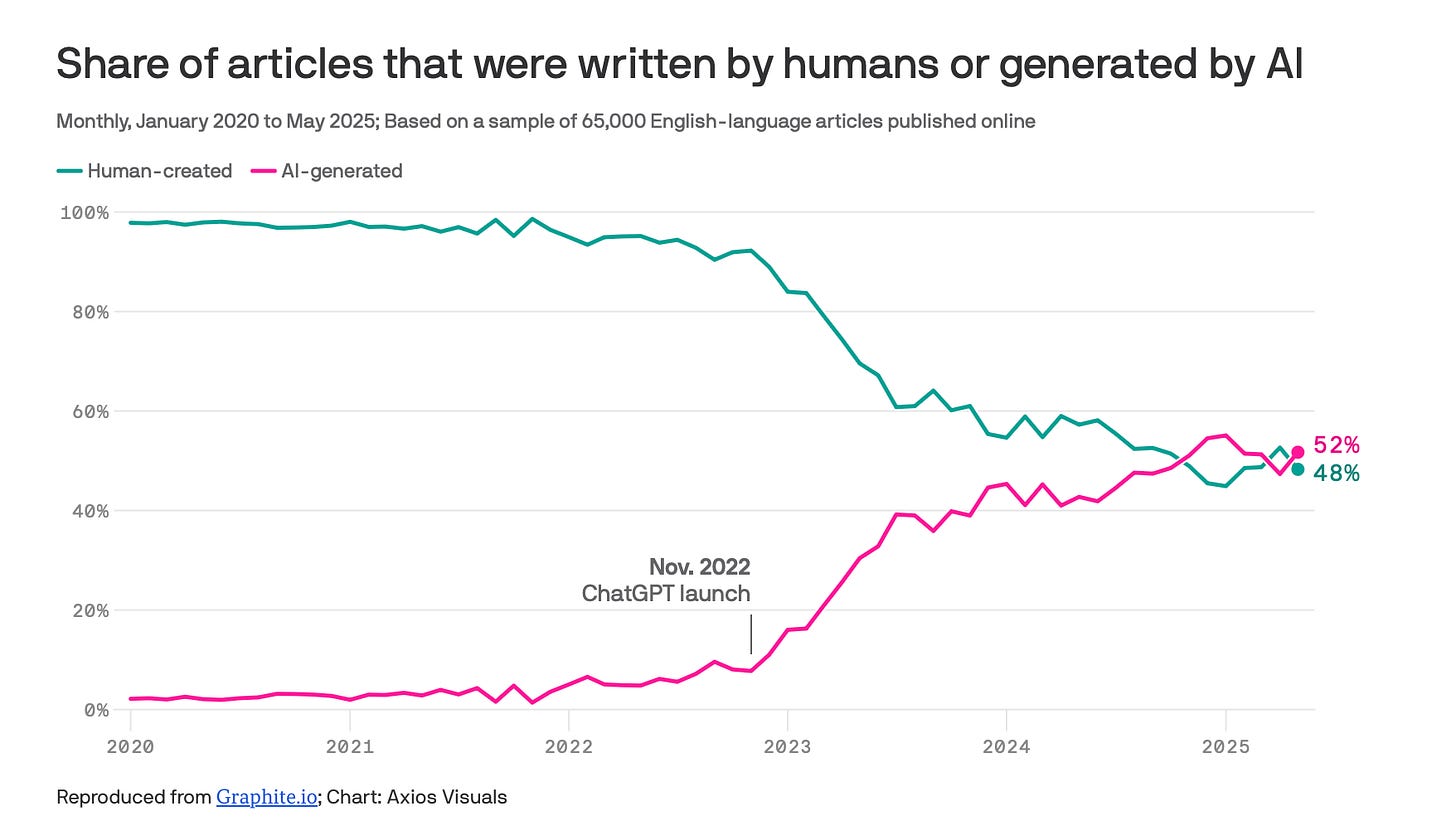

Humans won’t disappear from publishing articles, creating videos, or working on deep research, but the unprecedented speed of publishing and the unprecedented array of voices, on top of generative AI tools spitting out more and more answers for chatbot tools, will equalize the playing field. In fact, one research firm found that, over the last five years, the share of articles written by humans and the share written by generative AI have become about equal, according to Axios. Graphite analyzed 65,000 English-language articles published between January 2020 and May 2025; ChatGPT launched at the end of November 2022, and that’s where we see the shares really start to converge. Graphite labeled an article as genAI if less than half of it was written by a human being, per Axios. (Graphite’s team also noted a 4.2 percent false positive rate versus a 0.6 percent false negative rate.)

I wanted to touch upon this quickly. Much of what I write about is through the lens of what could happen, conclusions that I draw based on behaviors that seem irrevocable. OpenAI and ChatGPT may or may not be inevitable in this AI-powered next generation of information collection and distribution, but tools like ChatGPT certainly aren’t going anywhere. As I wrote last week:

Approximately one third of people who do use chatbots specifically for news found it “difficult to determine what is true and what is not,” per Pew Research. Not to mention that about half of people who use chatbots for news feel like they often see information that is inaccurate. About 16 percent of people say they often see information that isn’t new, and there’s an additional quarter who couldn’t decipher what’s real and what’s not.

All of these little data points add up to one potentially consequential story: we’ll have more information than we ever thought possible, and the deeper the rabbit hole goes, the less we’ll know about where any of it is actually coming from. New regulatory efforts, like Gavin Newsom’s recently signed law that would order companies to label AI-generated interactions, may be the first step in the right direction. But if we know anything about how regulation fares against emerging technologies, it’s always the tech guys who win out. So is there hope for a better information future?

Well…

Resurrecting Humanity Within the Dead Internet

Alexis Ohanian, best known as the co-founder of Reddit, has a pretty positive note for most of us spending our days worried about what the state of AI rot will do to our world of information, of connection, of social interaction: if the internet gets so fucking bad that we are repulsed by it, the only thing left standing is the most basic human connection.

“You all prove the point that so much of the internet is now dead,” Ohanian told John Coogan and Jordi Hays, hosts of the fast-growing TBPN podcast.

Ohanian is far from the first person to argue that an amalgamation of slop will lead to increased value for human intimacy, connection, and authority. Doug Shapiro, one of the foremost experts in this space, penned an entire essay exploring the moat that exists around “premium” content (like a Netflix scripted show or a Searchlight movie) when more content floods the zone. Part of Shapiro’s argument boils down to three points on what abundance does to the value of scarcity. These include:

Abundances creating scarcities

Abundances amplifying scarcities

Abundances enabling new businesses

It’s the first two points that I’m particularly interested in for this essay on how the dead internet and bot-ifying of information may drive stronger affection, loyalty, and reliance on existing businesses. In entertainment, having to frustratingly navigate through an endless river of slop on platforms people typically gravitate toward when bored — Instagram, TikTok, YouTube — may lend itself to more engagement with traditional entertainment sources whose value increases. Now, I don’t think that Instagram, which just crossed three billion monthly active users, is suddenly going to drop in overall use. After all, there is no incentive for tech conglomerates to worsen their own product, drive away advertisers, and become obsolete.

But those new abundances do reiterate the value in existing businesses, and existing talents. “In media, for instance, lower barriers to distribute content resulted in an explosion of content choices,” Shapiro argued in his essay. “That made curation scarcer, shifting value to the platforms that control the end user relationship.” When it comes to information and media, curation happened through the editorial process, leaving editors to decide what stories were worth printing — what was fundamentally of value to a subscriber or reader. But it also occurred through algorithmic tools. It’s the latter that is consistently iterated upon for the benefit of the platform, not its users, often through surfacing worse information and more engaging rage bait. Although media companies are also interested in increasing circulation and engagement, there is still a process of deciding what gets published and what doesn’t.

Democratizing publishing was excellent in raising new voices and allowing underrepresented viewpoints to find an audience, but those voices were symbiotic with reporting. And those voices arguably increased the value of certain publications — The New York Times, The Wall Street Journal, Bloomberg — as well as put an emphasis on the best reporting collectives — 404 Media, Puck News, The Intercept — that stood out against the horde of content. Although it didn’t stop the impact of content proliferation, of media abundance, in how people accessed information, it did create a moat around some of these companies because of the perceived quality and value.

This gets into Shapiro’s argument that abundances actually amplify existing scarcities. Take concerts, which were already scarcer than CDs or tapes because venues have physical limits and only so many tickets are available. They became more sought after by people who had newly freed-up money because their costs had shifted: streaming (and free music) replaced the cost of CDs, and that spending could move toward live shows. Shapiro then adds, as I have argued in the past about these types of events, “the social signalling of the scarce thing goes up as the alternative becomes commoditized, especially in our increasingly social world.”

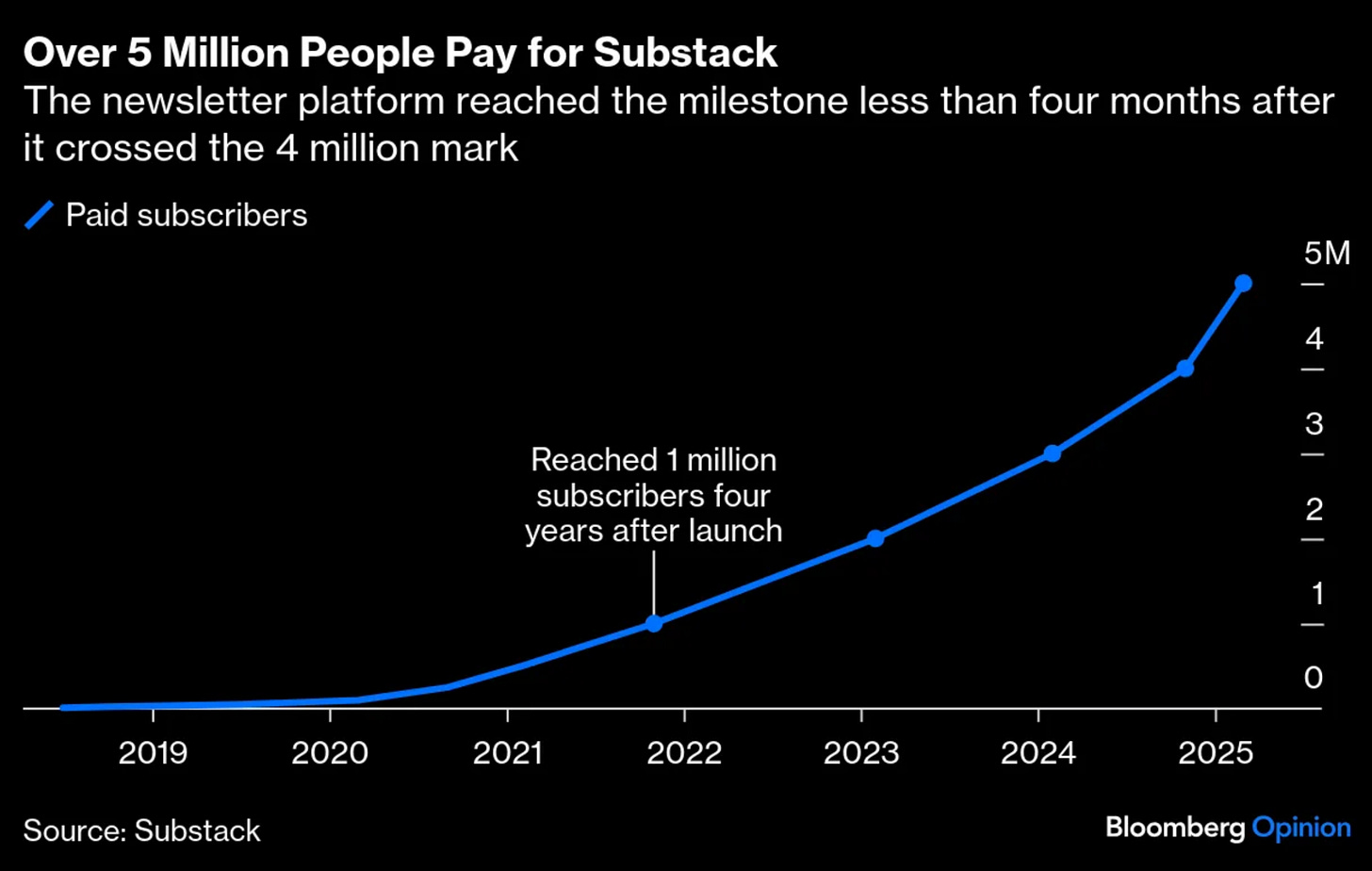

In an information setting, what does amplifying value look like? Some of that answer is found in Substack and the rediscovered newsletter ecosystem. People are spending less on information as a whole (books, magazines, newspapers), so that money can transfer to sources of specific information where the reader places additional value. Someone may subscribe to Hasan Piker, for example, or Taylor Lorenz to get access to information from someone whom they admire and who speaks to their specific interests. Of course, power laws come into play (meaning that the top few percent make up around 90 percent of all subscriptions), but that doesn’t change the overall shift in consumer behavior.

Of course, there’s certainly proof that tools like ChatGPT are invading these spaces, but this is where the quality meter and subscriptions help to differentiate the most valuable work from slop. If information is shoddy, if writing styles aren’t captivating, people won’t pay for those Substacks. Although there is increased competition, there is still a moat around quality, and that quality (including accuracy and expertise) is amplified. Democratization in both content creation and distribution doesn’t make the value proposition equal. The issue, as many independent creators know, is on the discovery side.

And that brings us to Shapiro’s third point: that abundance creates new business opportunities. In his example, he points to broadband internet and more connectivity effectively creating “free bandwidth.” As Shapiro notes, “bandwidth used to be extremely expensive. The emergence of essentially free bandwidth created literally trillions of dollars of value because it birthed streaming media, cloud computing, and SaaS [software as a service] business models, among other things.”

But how does that translate to media? If increased content means bigger discovery issues, and curation is relegated to algorithmic recommendations, then isn’t media still in the same dire place as before…and by before, I mean our current moment?

The Art of Subversion

When you think about how information breaks through in a currently crowded space, there’s one word that sticks out to me again and again: subversion.

Think about how good information appears on cluttered feeds like Instagram and TikTok today. It’s a repeatedly used format. A 45-second video starts with some piece of information, typically spoken by someone with an account labeling them a doctor or expert of some kind, or it’s a clear podcast set-up. There’s a soundbite designed to evoke a reaction out of you: disbelief, curiosity, agreement. And then it cuts to another expert, who uses their own expertise or data to debunk the clip that you just saw. They rarely link to the other interview, and they rarely allow for duets with the dunked-upon video. Clearly, the entire mission statement of this account is to cut through the cacophony of noise and misinformation on the internet in an attempt to have actual truth break through. This in turn creates new business opportunities.

Here’s the tricky part: who is a verifiable expert, and who’s not? Whose word is more sound than someone else’s? We didn’t just democratize information and content with the arrival of the internet; we also incidentally democratized expertise. But, if we look at Shapiro’s three rules for abundance and scarcity, and as we see even more information proliferate through these mediums thanks to new generative AI technology, my hypothesis is that it will embolden a percentage of people to actually seek out proven expertise, proven fact, and proven science or reliable reporting.

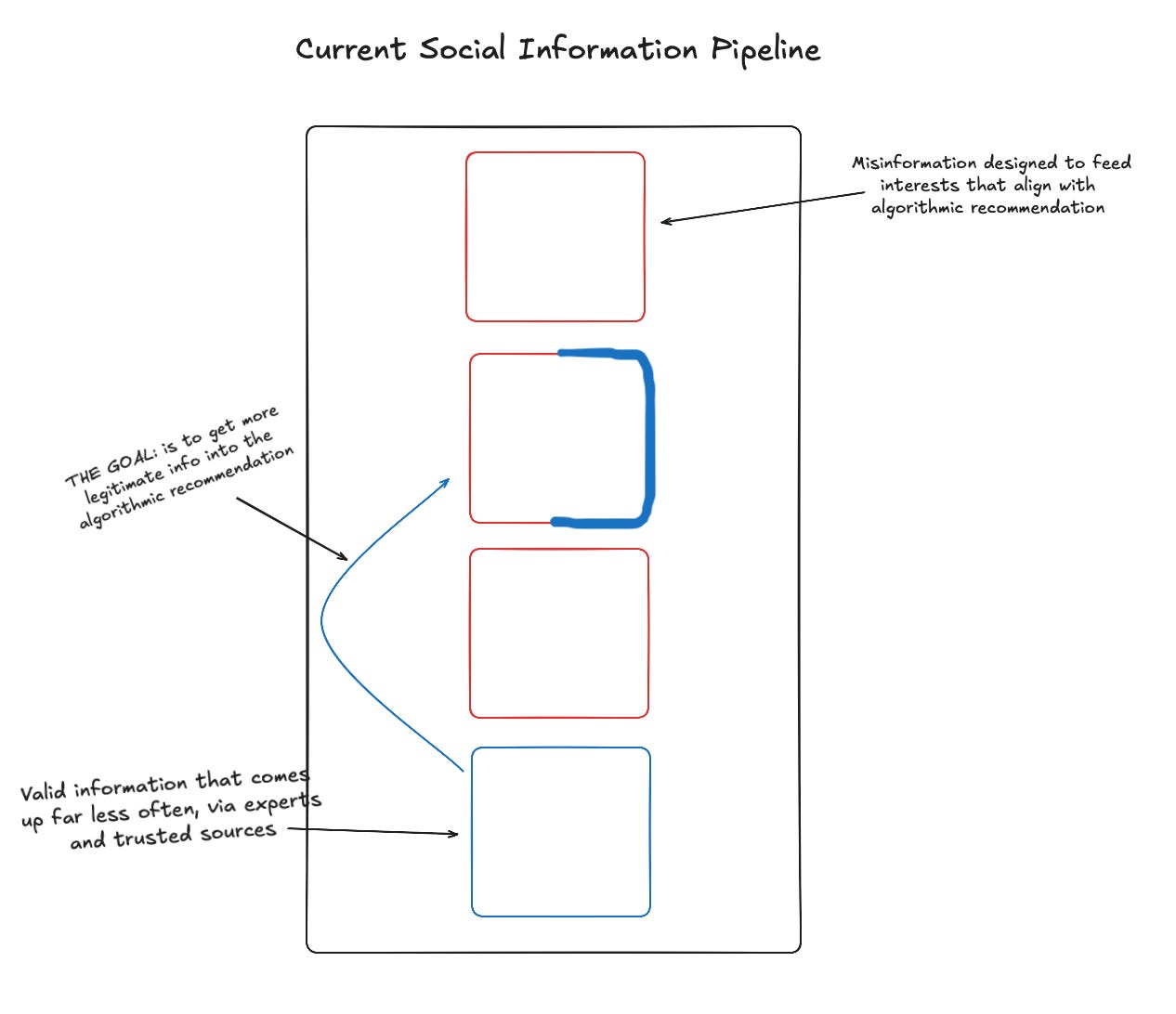

Trying to break through those implanted discovery mechanisms, like reliance on the algorithmic feed, to reiterate the importance of authority, expertise, and reported truth instead of accepting suave misinformation requires subversion of format. And subversion may help move a percentage of people toward incorporating more trusted sources in their information habits. Nothing is going to replace hyper-personalized videos and voices targeting our most inherent, innate beliefs, but a plethora of slop may, ironically, create the perfect conditions for those trusted sources and voices to command renewed attention on those same platforms and help to level out the inaccuracies. It looks a little like this:

Proof of concept for this type of subversion working is important. And for that, I turn to Doctor Mike.

Mikhail Oskarovich Varshavski, better known by his moniker “Doctor Mike,” dedicates his entire YouTube, TikTok, and Instagram channels (a combined 10 million subscribers and followers) to correcting medical misinformation. As the internet shifts to favor video, and specifically short form video, the way we seek out information changes from a Google search to a query in TikTok. The further that shift goes, the more integral short form video platforms become to our ability to seek out, find, and consume information about inane things like movie reviews as well as critically vital resources about governmental affairs, human rights issues, and medicine. He can’t react to every insane video he comes across or is sent throughout his day, but he harps on the most egregious claims that are finding traction.

One particular exchange between Varshavski and Dr. Steven Gundry sticks out. Gundry is a cardiologist and nutritionist who has developed a strong following amongst conspiracy theorists for comments he’s made about the issues with plant-based diets and allegations that the mRNA COVID vaccine leads to heart inflammation, the latter of which received a published note of concern from the American Heart Association. Gundry is the exact type of personality that works well on the internet. He’s board certified, so there’s a perceived validity to his opinions because he has the right prerequisites. He’s a published author, so there’s a perceived established expertise to his ideas. And he’s a podcast host who understands how to use TikTok, Instagram, and YouTube well, making him accessible and easily discoverable to individuals who are already watching related content. In one Instagram Reels clip that has more than a million views and 4,000 comments, Varshavski and Gundry are talking about sugar content in grapes compared to a Hershey’s chocolate bar.

“You said grapes are sugar bombs that are problematic,” Varshavski says at the beginning of the clip posted to his channel. (This is an important distinction; when consuming information primarily through short form clips, who’s posting, and therefore framing said information in said clip owns the context and slant audiences encounter.)

“There’s as much sugar in a cup of grapes as there is in a Hershey’s candy bar,” Gundry replies.

“But that requires nuance,” Varshavski rebuts. “A child hears, a mother hears ‘grapes are sugar bombs, might as well give them Hershey’s,’ they will give them Hershey’s.”

“Might as well,” Gundry says, with a sly grin.

“Don’t you think grapes have more nutrients than Hershey’s?”

“Believe it or not, extra dark chocolate has some of the highest polyphenol content —”

“But we’re talking about milk chocolate,” Varshavski pushes back.

“I wouldn’t give anyone milk chocolate,” Gundry immediately replies.

“Exactly, so why even bring the comparison?”

A couple of key words in Varshavski and Gundry’s 20-second back-and-forth are worth highlighting and doubling down on. Nuance is the first word. Specifically, saying “that requires nuance” is perfect framing for the facts that get spouted off all the time in videos, especially around health. Yes, the amount of sugar in a cup of grapes is comparable to the amount in a Hershey’s chocolate bar, but the nuance behind that comparison stretches into healthy or unhealthy lifestyles, addictive qualities of one food versus another, and the type of sugars that are in grapes compared to those in chocolate bars. And nuance is another way of saying that the information someone is floating is misinformed or lacking context, which Varshavski positions as something that he’s trying to combat in a format that Gundry’s audience is already digesting.

Context and nuance within subversion of format are the key ingredients in trouncing misinformation for audiences who aren’t diving deeper into a particular subject they come across in a 45-second video. In 2019, Jasmine McNealy, an associate professor at the University of Florida’s school of journalism, wrote that “news is data placed in context.” But that implies a list of rules, a commitment to the trade, that journalists operate under. Doctors didn’t pledge their lives to journalistic integrity; they took a Hippocratic oath. Though they’re obviously not the same thing…they kind of are. Our trust in doctors came from an understood and shared belief that certifications made them experts enough in their field to help those without a decade-plus of school, but our willingness to go back again and again when we encounter questions about our health is firmly rooted in the same shared belief that doctors won’t lie.

Medical information is particularly volatile because of these platform shifts. There are more than 170 million daily active users on TikTok in the U.S., or about half of the country’s population. About 60 percent of that userbase is part of Gen Z, and more than 55 percent of Gen Z use TikTok for health information, with 33 percent of Gen Z using TikTok as their primary source of health information, a team at Zing personal training found in 2024. Part of the allure is the price tag of said information. Fitness freaks know that trainers are expensive.

Outside of wellness, one in eight 26-year-old Americans (or those who are no longer able to use their parents’ health insurance) do not have their own health insurance. There’s no subscription fee required to get information from TikTok on an issue that seems super specific to an individual but appears after a quick search query in the app. Who needs doctors or the New York Times when there’s someone with the word doctor in their username spouting off what sounds like solid advice to the tens of thousands or millions of people following their account?

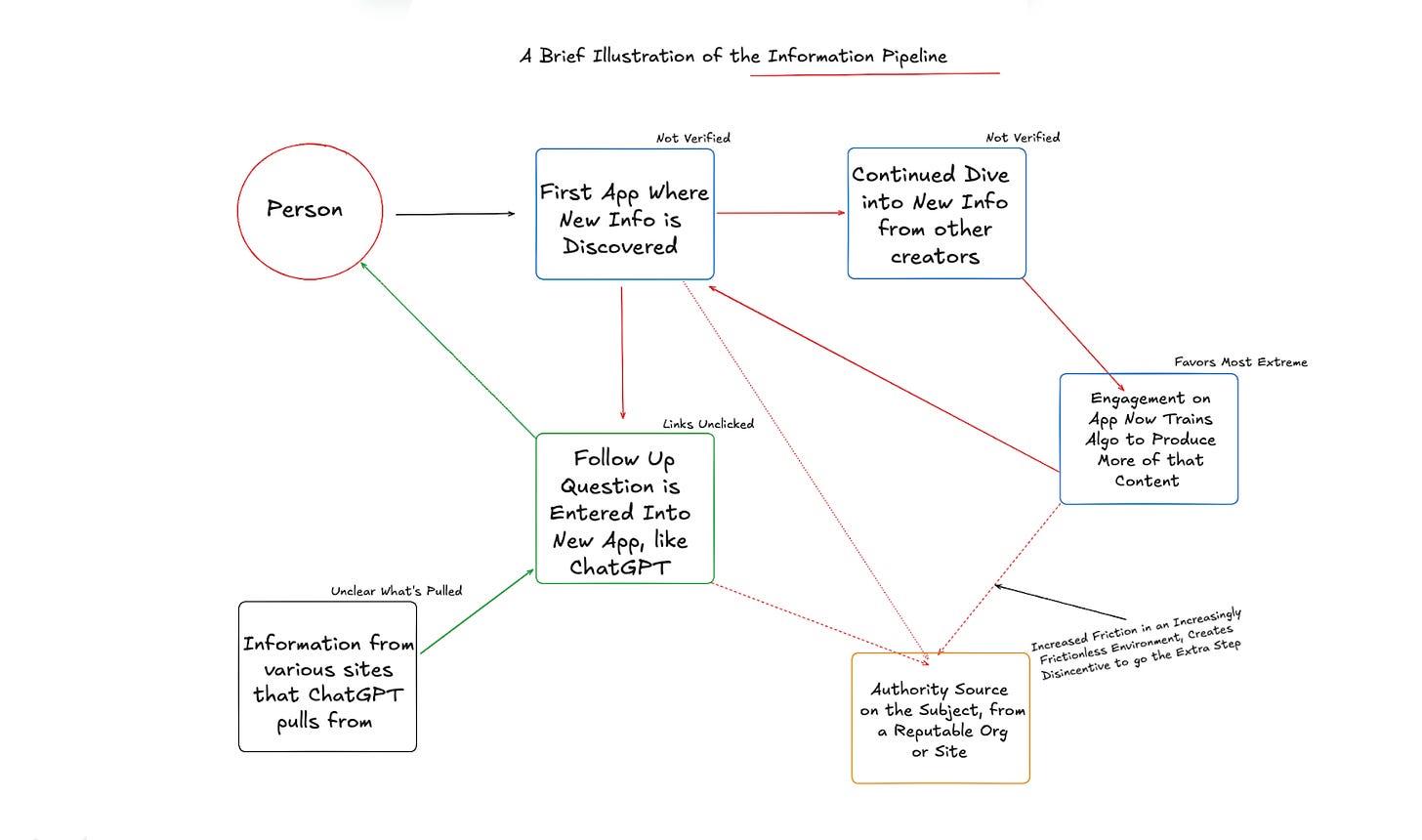

Significant parts of that promise are found in nuance. Data is one step toward finding the truth. But data without context doesn’t lead people to the answer; it leads them to scenarios, and scenarios are where theories are born. Theories are born from “if” questions, not necessarily more whys. If, for example, there is an equal amount of sugar in a cup of grapes as there is in a chocolate bar, then surely a chocolate bar is just as healthy. This is Varshavski’s pushback. Some people may stop and ask why more doctors haven’t said go ahead and eat Hershey’s bars instead of grapes if that were true, but many more may not. Play this scenario out a little further by investigating the typical information harvesting journey.

Gundry’s comparison between grapes and chocolate prompts the obvious question: “is there the same amount of sugar in a cup of grapes as there is in a chocolate bar?” I took the question to ChatGPT and, although the answer did get into the differences between the types of sugar by the end of the output it spat out, the immediate question was answered with a simple affirmation. There were no links to click on to double check the sourcing. What if the source ChatGPT was pulling from was just a regurgitation of Gundry’s own research?

None of this particularly matters. Around 20 percent of people who regularly consume news do so via TikTok, marking an increase of 17 percentage points since 2020, when TikTok first really took off in the United States. That’s a bigger jump than any other social media or video platform saw in the same period, per Pew Research, including YouTube, which rose by 12 percentage points. Instagram grew by nine percentage points, while text-first platforms, like X and Truth Social, dropped by three and two percentage points, respectively. Community-driven apps, like Facebook and Reddit, also saw slight increases. It doesn’t take a genius analytical mind to pick up on the trend. People want less information from traditional sources, want their facts delivered as content, and want their information packaged as entertainment.

In the era of vibe truths, having someone like Doctor Mike, who uses the same tactics to subvert the misinformation he sees and appears on the same feeds, is critical. It’ll only become more critical when generative AI information flows, as I wrote about at length in the last blog, lead to a never-ending cycle of misinformation traveling at faster rates. As we enter the unknown, it’s important to keep in mind scarcity, amplification, new business, and subversion. In a period of frustrating and scary unknowns, it’s good to find little bits of light and hope. Good, trusted information isn’t dead yet, and it may make a more impactful return before we all drown in slop.

What do you think? Is this enough to combat some of the increases in misinformation we'll see on our timelines? What directions do you think individuals, collectives, outlets, and platforms should take?

Hint: the platform part of the equation is the focus of my next blog.

I think the cult of personality of internet personalities is a critical part of the information and misinformation we are experiencing today, but I'm from a pre-internet generation and as I was growing up I saw just as much poor medical advice, fad diets, and "folk-wisdom" coming from "trusted" sources. (Mostly supermarket magazines.) What I don't really understand is how humans process information, such as hearing that there's as much sugar in a cup of grapes as a Hershey's chocolate bar means I should eat more chocolate, instead of "maybe I should cut back on the amount of grapes I'm eating!" When people turn to these internet sources of information, what are they seeking? Do they want to solve a problem? Alleviate a concern? Do they want to be healthier? Lose weight? Validate the reasons they can't lose weight? Or is it simply a source of entertainment?

What you always seem to highlight so expertly is that in this new media landscape, engagement is the product. Does the social media medical expert really care if people are getting healthier, or is it more important to "challenge" the medical establishment to get more people to smash the like button?

In this new media world where attention is monetized, we don't incentivize the search for truth. And that's very sad to me.