Meta Wants to Pay You to Ruin the Internet

All it takes is 100% of your attention, a willingness to post, and a decreased sense of quality

Last year, a Wired report found that more than 173,000 videos from YouTubers with more than one billion collective subscribers were scraped by the largest artificial intelligence companies in the world to train their models. It’s a staggering number, but it doesn’t mean much until you look at the demand side of the generative AI equation. Companies like Midjourney report more than 16 million active users; Sora produces more than 10 generations per second, according to ZNet.

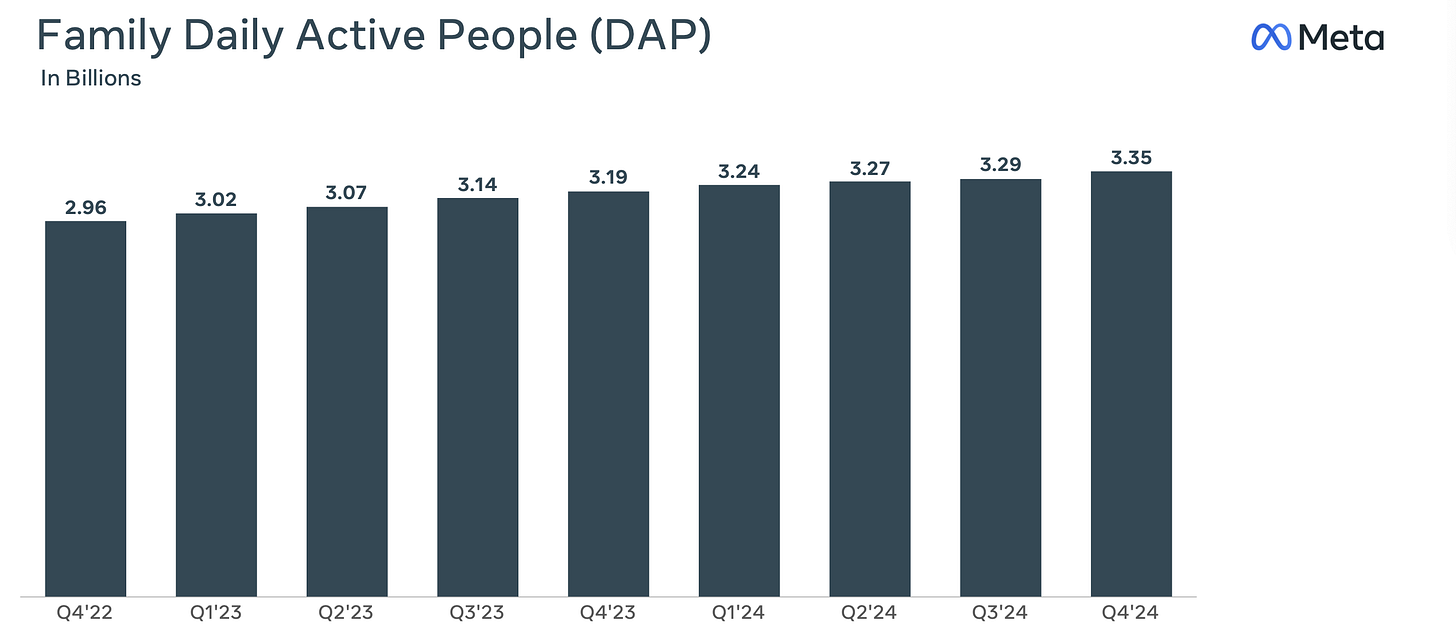

Those numbers are mind-boggling (and profoundly disturbing from an ecological standpoint) on their own, but I was reminded of them while reading a few curious news stories last week. The first is that Meta’s family of apps now reaches more than 3.4 billion people globally each day, and Meta is doubling down on its investment in artificial intelligence tools to further incentivize those users to engage with (and effectively train) the company’s large language model, Llama.

The second is that Instagram is doubling down on prioritizing “original content,” and specifically original video, in its algorithmic recommendations. The third is that despite all conversations, TikTok doesn’t appear to be much closer to actually finding a U.S. partner, creating a significant hole in the market that almost all analysts agree will benefit Instagram the most. (Clearly, Meta does too, as seen by the company’s reported lobbying to ban TikTok.)

Although this final peg is from December, it’s integral to the aforementioned points: Facebook and Instagram trended up in usage among teens in the U.S. between 2023 and 2024. The only other “service” across major social media platforms in the U.S. that saw a bump in the same period was WhatsApp…also owned by Meta.

All of these little nuggets of information paint a picture showing what Meta wants to do; the data cements that Meta is in the unique position to enact those plans. You can see the company building the dominant social, short form video app, using original uploads from creators who are encouraged to use Meta’s proprietary generative AI tools, allowing Meta to better train its models and increase advertising revenue in the process.

Win-win-win for Meta across the board.

Considering there are reports that companies like OpenAI already use YouTube videos to train their LLMs, and knowing that companies like the New York Times are suing OpenAI for training its ChatGPT service off paywalled articles, the best move for a company like Meta (and Google) is to encourage creators to continue posting within its own controllable environment.

One final story to keep in mind before we move forward. Last week, the Copyright Office issued new guidelines around works produced with AI, noting that copyright protection won’t be awarded to works that use AI prompts. This is “true whether the prompt is extremely simple or involves long strings of text and multiple iterations,” according to The Verge. Now, there are two ways to read this. The first is that creators who want to use AI within their work, and who are thinking about going the full distance with that video to the point of copyrighting it, won’t use AI as a primary creation tool. The second is that those who will use AI aren’t interested in copyrighting their work but will contribute to a platform like Instagram or YouTube for some kind of payment for their contribution.

All of this suggests that user generated platforms with generative artificial intelligence designs are in a particularly strong position. If they house content and pay creators for their work, creating an immediate incentive to work for the platforms instead of protecting their own work, then Meta and Google guarantee themselves unprecedented access to videos for training purposes without having to worry as much about lawsuits.

As Posting Nexus readers know, my obsession is with how changes in technologies and incentive structures alter where we spend our attention, both voluntarily and involuntarily. Knowing this genAI dominance is a distinct possibility for Meta’s future — and Google’s YouTube — how does that impact what we end up watching? How does that impact our new baseline of quality for content we flock toward?

Perhaps most importantly, how does that impact the next era of our creator economy?

How People Watch Things Now

Sometimes underlining the obvious is the best way to illustrate what could happen. I’m going to do so in a few charts.

The first is the amount of time spent with traditional entertainment sources versus user generated content on short form video and video-on-demand platforms over the last 10 years. The chart neatly summarizes that time spent with traditional video has decreased over that period, while time spent with digital video (including streaming) has increased. We know that audiences are spending less time in centralized systems, and we know they are seeking out easy-to-devour content.

The second chart showcases the increase of time spent with social video. As analyst Matthew Ball points out, it’s not that time spent with other user generated content platforms declined, but that new apps like TikTok added to time spent with those services. Naturally, that time has to come from elsewhere.

Ball’s analysis focuses on gaming on iPhones and other devices, but social video is also clearly eating into the number of subscriptions that people are willing to purchase (the third chart below)…

…especially when those platforms offer the same kind of undifferentiated entertainment (the fourth chart) and are easier than ever to cancel. Less time in the day for longer form content coupled with subscription fatigue naturally leads to reduced subscriptions and declines in engagement. Who benefits? TikTok…and now Instagram or YouTube.

I’m throwing quite a bit of data at you, so let me slow it down for a second. All of these data points paint a larger picture about the health of a paying customer base and why we’re seeing changes year after year. The first major takeaway is that quality as a reason for spending time with a certain type of entertainment format has diminished. Demand for high-quality entertainment hasn’t gone away, but consumers are saying they get an equal amount of value out of their time on TikTok or Instagram as they do with more traditional programming.

To put it even more bluntly, these are two different types of entertainment formats that used to not compete with each other when the access points were highly differentiated (theatrical, as one example). But in a direct-to-consumer universe, time served well by free and/or UGC platforms means there is less of a need to have access to multiple traditional entertainment suppliers.

It’s not that TikTok killed Netflix, but Netflix helped to kill cable and, in doing so, destroyed the perceived need for access to all 500 channels to fill holes of time.

Clearly there are new concerns facing a traditional Hollywood studio, and this is where artificial intelligence may theoretically help to maintain or increase the perceived value of those higher end projects, increasing attendance at theaters or creating stronger connections to companies’ own direct-to-consumer offerings. I would argue most studios know that generative AI tools are particularly promising to the UGC platforms, so now the question is whether those studios can use the same tools to better differentiate their own titles.

Those within Hollywood are hedging their bets. A24 just hired Adobe’s chief strategy officer, Scott Belsky, to oversee all tech and innovation for the company. Sony is trying to hire an artificial intelligence ethicist, Netflix has a well-paid manager overseeing all of AI, and Lionsgate is encouraging its creatives to work with genAI company Runway on their own feature length projects. Lionsgate is also feeding about 4,000 of its own movies into Runway’s system to help better train the model for its own projects. And yes, much has been written about obvious concerns over how Runway will use that data outside of Lionsgate’s own work, or how any potential data spillover into non-Lionsgate projects would even be tracked down.

None of these moves particularly surprise me. Something Ball told Ben Thompson last week gets to the heart of the discussion. Consider this point from Ball:

“What we have used is advances in CGI to realize more sophisticated scenes, but more importantly, increase the number of scenes that are in a film without 10x-ing the cost. The expectation is very much that generative AI will likely drive that same outcome…The no man’s land is around $50, $70, $80, $100 million. The argument would be, yes, a $300 million film today might look like what would have been a $500 million film using generative AI, but now do we have film that today can barely use synthetic environments without going across $20 million, get up to $50 or $60 or $70 million mark.”

Ball isn’t the first to hypothesize this use case for generative AI tools in filmmaking, and he certainly won’t be the last. Whereas streaming disrupted how audiences accessed content, splitting apart the centralized cable system to meet audiences in a post-pay TV age, generative AI seems to be held in the same regard as partnerships with technology providers like IMAX. It’s a change in the approach to production that will theoretically allow for more movies to be made or to produce more of a certain kind of movie at a reduced cost. I cannot stress enough how hard the word “theoretically” is working in that sentence.

Most executives in Hollywood are seemingly working with their creative partners to highlight the investment in AI as a tool to address monetary concerns rather than as a creative solution. This is not the same for companies like Instagram and YouTube, where generative AI exists beyond a tool. It is the creative solution. Keeping in mind all of the data and the trends those charts and figures highlight, how could generative AI and social video platforms’ impact on Hollywood continue over the next few years?

Do People Care?

I want to return to quality. It’s a spectrum. Today’s argument, based on the data we see, suggests that more and more people are happy to hover in the yellow of the quality meter.

Traditional film and television sits in the green. “AI slop,” as it’s come to be known, rests firmly in the red. That’s an issue for both active users and the platforms. Time spent on a platform is a data point that reflects willingness to spend time with a certain app, but actions performed on that app — or the ability for companies like Meta and Google to ensure that time spent is equivalent to actual impact for brand marketing or affiliate sales — are the more important part of the equation. Similarly, time spent on an app is seemingly tied to enjoyment of said app, but if that’s declining because of an onslaught of AI slop that people are encountering, erasing all value tied to connection or discovery, then time spent becomes a less important metric.

So, are people engaging with AI slop? Are users turning away from the relentless Shrimp Jesus posts? This reporting last year from 404 nails it for me:

The platform has become something worse than bots talking to bots. It is bots talking to bots, bots talking to bots at the direction of humans, humans talking to humans, humans talking to bots, humans arguing about a fake thing made by a bot, humans talking to no one without knowing it, hijacked human accounts turned into bots, humans worried that the other humans they’re talking to are bots, hybrid human/bot accounts, the end of a shared reality, and, at the center of all of this: One of the most valuable companies on the planet enabling this shitshow because its human executives and shareholders have too much money riding on the mass adoption of a reality-breaking technology to do anything about it.

Another story from 404’s Jason Koebler found that most of the AI slop dominating people’s Facebook feeds emerged from countries like India, Pakistan, Indonesia, Thailand and Vietnam “where the payouts generated by this content, which seems marginal by U.S. standards, goes further.” Koebler noted these posts “are an evolution of a Facebook spam economy that has existed for years,” adding that the “spam comes from a mix of people manually creating images on their phones using off-the-shelf tools like Microsoft’s AI Image Creator to larger operations that use automated software to spam the platform.”

Simply put, Facebook rewards these high engagement posts that are specifically designed to tap into those high reward systems, creating a zombie minefield of “engagement” with no actual socializing or quality time spent with the largest platform in the world.

Whether or not people care enough about AI slop to remain on Facebook or turn to other platforms is the question of the hour. Ed Zitron has written about overall traffic to many of these websites diminishing over the last few years, arguing that people are walking away from shoddy online experiences. Reports about concerns at X over plummeting engagement have appeared over and over. Time spent with new platforms, like TikTok, has impacted more traditional social media sites, suggesting that younger generations of internet users aren’t satisfied with yesteryear’s largest sites.

Remember that a decade ago, 71% of teenagers in the United States between the ages of 13 and 17 used Facebook daily. By 2022, that number had plummeted to 32%, with a relatively insignificant bump to 33% in 2023. Facebook head Tom Alison told Axios in June 2024 that 40 million teens in the U.S. and Canada use Facebook daily, with Axios estimates putting that at roughly 19% of all daily active users in those territories. Young people not using a platform is a problem for advertisers, and that makes it a problem for Meta, which generates more than 95% of its revenue via advertising across its family of apps.

Facebook has plans to combat this growing problem. There are three main approaches: lean more on video, lean more on creators, and lean more on feeds that serve users what Facebook deems they want (shopping and entertainment) and less of what they don’t want (news and audio).

At the center of all these goals is the unspoken generative AI strategy. Facebook wants to showcase better shopping content and work with more advertisers? Generative AI can theoretically help in the short term. Ads will look less like ads, and more like content. As a result, content will look more like ads. That’s a nightmare reality, but data suggests that average users are fine with that shift. Meta wants to enable creators to spend more time and earn more on Facebook and Instagram? Introducing easy-to-use editing tools (like the one coming soon to Instagram) and incentivizing creators by continuing to pay out bonuses for highly engaged content regardless of quality brings into focus another generative AI pathway.

Ultimately, the more that people contribute to Meta’s AI database, the better Meta’s AI training gets. You can see the potential B2B business if the company’s genAI capabilities, thanks to all this data, become a leader in the marketplace. All of this is also true for Google and YouTube. It’s a race between two of the largest data collectors, two of the largest technology conglomerates, to beat the other to faster, shittier content.

Most of my concerns come down to one simple question: is it all or nothing? Will our feeds become nothing but rotten, thoughtless products of prompts that distract us just enough that we don’t care? Pure toilet fodder? Will it just become a part of our feeds that some are drawn to and others aren’t? Or will it become so intertwined with original content — human faces, actual storytelling — that our definition of quality will change to incorporate this new content form?

What’s Next?

Let’s not jump to conclusions, but we need to play out serious and seriously plausible scenarios concerning generative AI being weaponized on a dominant platform targeting an audience that has proven quality matters less and less. Deepfakes become an even bigger concern on a platform that is reducing its content moderation practices and all but eliminating fact checking.

Multiply this by hundreds of millions of people potentially using genAI in their posts to target specific feeds and expect bonus rewards…well, you get a worse and faster version of YouTube circa 2015. When growth at all costs defines strategies, and when products are deployed to meet that growth at speeds that allow for several “unintended consequences” to occur, you find yourself igniting an inevitable fire for your future self. As I wrote a few weeks ago upon Meta’s original content moderation pullback announcement:

Growth comes from subsuming. Devouring competitors, conquering neighbors, and controlling information. Inevitably, at some point, cracks begin to appear in the very foundation once the entity in question becomes too big. Non-stop growth always leads to self-immolation.

What’s unclear is whether Meta is too big to fail. Short form video isn’t a trend that’s going away, and it’s certainly nowhere close to inevitable that TikTok will return. Social commerce is increasing, and companies like Meta and Google, through their monopolized position between advertisers and creators, have the best window into connecting time spent on a platform to money earned. Ultimately, as I mentioned earlier, these are data collectors who will take every prompt, every image, and every comment on those videos or posts or transactions to beat competitors who don’t have the same access to data. Add in a suite of CEOs willing to kiss the ring of a president to ensure they’re left alone to build their future monopolies, and voilà. Unavoidable harm.

Okay, time to stop being a Debbie Downer for a second. I don’t believe Hollywood will disappear. Even if the level of quality people want from entertainment on their devices decreases, their expectation of quality on bigger screens increases. Generative AI may help studio executives produce movies faster, but so long as quality control remains central, and so long as creatives remain at the forefront of their art, we may see a return to seeking out those higher value projects.

I also believe that audiences will seek out creators who are producing the best content. Most creators are not slingin’ AI slop just to make a buck. I’d wager Instagram chief Adam Mosseri wants to promote more original content because it’s crucial to the company’s goal of increasing time spent on the platform, and he knows that nothing but AI slop will drive people to others, like YouTube, which is home to the global creator base. Generative AI might help creatives produce slightly better looking videos, but I believe the creative body as a whole will beat out general AI slop every single time.

At the end of the day, so much of the future we want relies on our ability to better control where we give our most valuable commodity: our attention. If we don’t want to see high caliber, creative, popular art disappear, we must support the industries we took for granted. If we don’t want our feeds to be relentless AI slop, we must refuse to engage with what’s presented simply because it’s easy. We are a country that’s built on addiction. Short form video is entertaining as all hell; it’s also a quick fix for boredom and brains seeking out instantaneous dopamine releases. By assigning value to valueless things, we do nothing but ruin our own digital worlds.

We have a role to play in whether we choose to allow companies like Meta and Google to train the next generation of content we engage with based on our input and posts. It’s challenging — Facebook is the internet for so many countries, and YouTube is the largest video hosting site in the world plus the second largest search engine. But it’s also clear that generative AI, while expensive, will ultimately benefit these two companies first and foremost. If it benefits Meta, who does it hurt? I think of the answer the way we are taught to think about the underlying business plans of the companies who want to control what we see and how we talk to each other online: if you’re not paying for it, you’re the product. Stop being their training base, too.